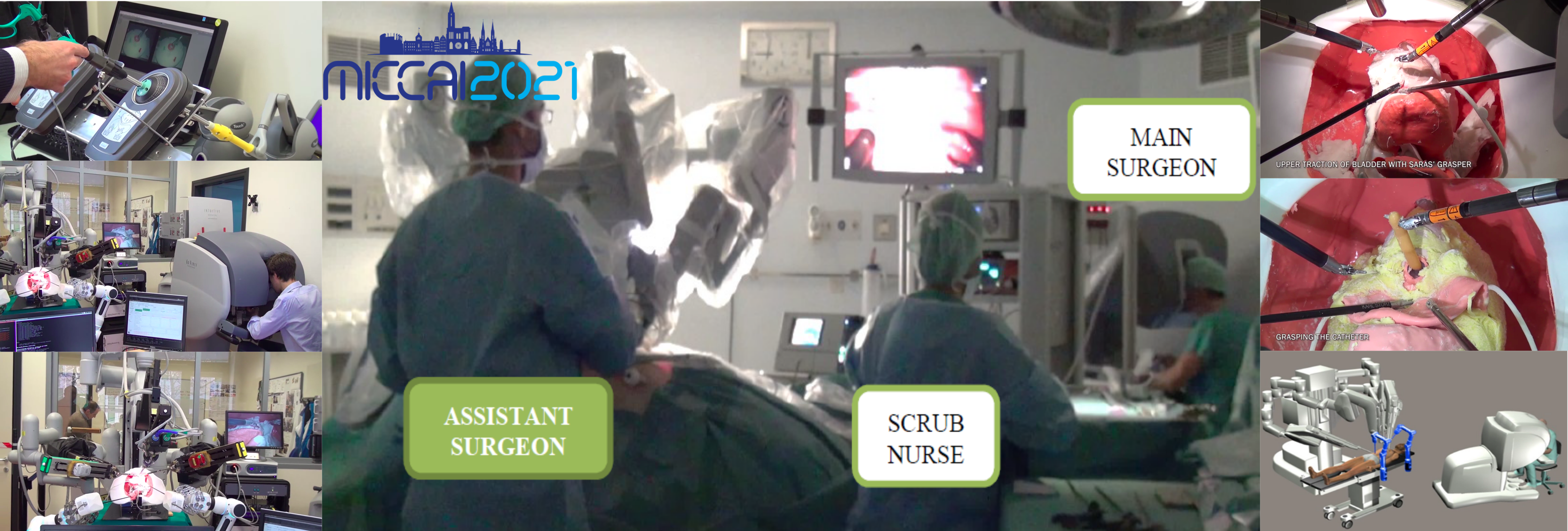

SARAS challenge on Multi-domain Endoscopic Surgeon Action Detection (SARAS-MESAD)

This challenge is part of the MICCAI 2021 conference, which will be held from September 27 to October 1, 2021, in Strasbourg, France.

Description

Minimally Invasive Surgery (MIS) involves very sensitive procedures. The success of these procedures depends on the individual competence of the surgeons and on the degree of coordination between them. The SARAS (Smart Autonomous Robotic Assistant Surgeon) EU consortium is working on methods to assist surgeons in MIS procedures by devising deep learning models able to automatically detect surgeon actions from streaming endoscopic video. This challenge builds on our previous challenge, SARAS-ESAD, organized at MIDL 2020.

Despite its huge success, deep learning suffers from two major limitations. Firstly, each task (e.g., action detection in radical prostatectomy) requires collecting and annotating a large, dedicated dataset to achieve an acceptable level of performance. Secondly, and as a consequence, each new task requires building a new model, often from scratch, so that the number of models and datasets grows linearly with the number of tasks, with significant resource implications. Collecting large annotated datasets for every single MIS procedure is inefficient, very time-consuming and financially expensive.

In our SARAS work, we have captured endoscopic video data during radical prostatectomy under two different settings ('domains'): real procedures on real patients, and simplified procedures on artificial anatomies ('phantoms'). As shown in our SARAS-ESAD challenge (over real data only), variations due to patient anatomy, surgeon style, and so on dramatically reduce the performance of even state-of-the-art detectors compared to nonsurgical benchmark datasets. Videos captured in an artificial setting can provide more data, but are characterised by significant differences in appearance compared to real videos and are subject to variations in the looks of the phantoms over time.

Motivated by these issues, the goal of this challenge is to test the possibility of jointly learning robust surgical actions across multiple domains (e.g. across different procedures which nevertheless share some types of tools or surgeon actions; or, in the SARAS case, learning from both real and artificial settings whose lists of actions overlap but do not coincide). In particular, this challenge aims to explore the opportunity of utilising cross-domain knowledge to boost model performance on each individual task whenever two or more such tasks share some objectives (e.g., some action categories). This is a common scenario in real-world MIS procedures, as different surgeries often have some core actions in common, or involve variations of the same movement (e.g. 'pulling up the bladder' vs 'pulling up a gland'). Hence, each time a new surgical procedure is considered, only a small percentage of new classes needs to be added to the existing ones.

The SARAS-MESAD challenge provides two datasets for surgeon action detection: the first dataset (MESAD-Real) is composed of 4 annotated videos of real surgeries on human patients, while the second dataset (MESAD-Phantom) contains 5 annotated videos of surgical procedures on artificial human anatomies. All videos capture instances of the same procedure, Robotic Assisted Radical Prostatectomy (RARP), but with some differences in the set of classes. The two datasets share a subset of 11 action classes and differ in the remaining classes. They therefore provide an opportunity to explore whether multi-domain datasets designed for similar objectives can be exploited to improve performance in each individual task.

Task

The task is to jointly learn two similar static action detection tasks from different domains with overlapping objectives. The challenge provides two datasets, namely MESAD-Real and MESAD-Phantom.

MESAD-Real is a set of video frames annotated for static action detection purposes, with bounding boxes around actions of interest and a class label for each bounding box. MESAD-Phantom is also designed for surgeon action detection during prostatectomy, but is composed of videos captured during procedures on artificial anatomies ('phantoms'). MESAD-Real contains 21 classes, while MESAD-Phantom contains 14 classes. The two datasets have 11 classes in common.
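One way to picture the overlapping label sets is as a single joint label space that a shared model could predict over. The sketch below is only an illustration under stated assumptions: the class names are hypothetical placeholders (the actual label lists are distributed with the datasets), and `build_joint_label_space` is a hypothetical helper, not part of any challenge toolkit. Only the counts (21, 14, and 11 shared) come from the description above.

```python
# Hypothetical placeholder names; only the class counts come from the challenge text.
shared = [f"shared_{i}" for i in range(11)]
real_only = [f"real_only_{i}" for i in range(21 - 11)]
phantom_only = [f"phantom_only_{i}" for i in range(14 - 11)]

real_classes = shared + real_only          # 21 classes (MESAD-Real)
phantom_classes = shared + phantom_only    # 14 classes (MESAD-Phantom)


def build_joint_label_space(real, phantom):
    """Merge two class lists into one ordered list without duplicates.

    Returns the joint list and a name -> index lookup.
    """
    seen = set(real)
    joint = list(real) + [name for name in phantom if name not in seen]
    index = {name: i for i, name in enumerate(joint)}
    return joint, index


joint_classes, joint_index = build_joint_label_space(real_classes, phantom_classes)

# Indices of the joint space that are valid for each domain, e.g. to mask
# out classes that cannot occur in a given dataset during training.
real_ids = sorted(joint_index[name] for name in real_classes)
phantom_ids = sorted(joint_index[name] for name in phantom_classes)

print(len(joint_classes))  # 21 + 14 - 11 joint classes
```

With these counts the joint space has 24 entries, of which 11 are valid in both domains.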

Evaluation Metric

Submissions will be evaluated using mean average precision (mAP) for the detections on both datasets. Predictions for both datasets will be evaluated at Intersection over Union (IoU) thresholds of 0.1, 0.3 and 0.5. The mean over all three thresholds for both datasets is called Joint mAP and will be used to rank submissions. The leaderboard additionally reports: AP at 0.1 IoU for MESAD-Real (realAP-10), AP at 0.3 IoU for MESAD-Real (realAP-30), AP at 0.5 IoU for MESAD-Real (realAP-50), AP at 0.1 IoU for MESAD-Phantom (phanAP-10), AP at 0.3 IoU for MESAD-Phantom (phanAP-30), and AP at 0.5 IoU for MESAD-Phantom (phanAP-50).
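As a rough illustration of the quantities involved, the following sketch computes the IoU of two boxes and averages per-threshold scores into a joint score. It is not the official evaluation code. It assumes boxes are given as `(x1, y1, x2, y2)` corner coordinates, and it reads Joint mAP as the plain mean of the six leaderboard values (three thresholds on each of the two datasets); both are assumptions, and the function names are hypothetical.

```python
IOU_THRESHOLDS = (0.1, 0.3, 0.5)


def box_iou(box_a, box_b):
    """Intersection over Union of two boxes in (x1, y1, x2, y2) format (assumed)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    # Clamp at zero so non-overlapping boxes give an empty intersection.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def joint_map(ap_real, ap_phantom):
    """Mean of the per-threshold scores of both datasets.

    ap_real / ap_phantom map an IoU threshold to the score at that
    threshold (realAP-10/30/50 and phanAP-10/30/50 on the leaderboard).
    """
    scores = [ap_real[t] for t in IOU_THRESHOLDS]
    scores += [ap_phantom[t] for t in IOU_THRESHOLDS]
    return sum(scores) / len(scores)


# Made-up numbers purely to show the call; they are not challenge results.
example_real = {0.1: 0.6, 0.3: 0.4, 0.5: 0.2}
example_phantom = {0.1: 0.3, 0.3: 0.2, 0.5: 0.1}
print(joint_map(example_real, example_phantom))
```

Because both datasets contribute the same number of thresholds, this mean of six values equals the mean of the two per-dataset averages.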

Objectives

There is a huge number of MIS procedures, and most of them share some proportion of their objectives (i.e. the actions performed by surgeons) with one another. To develop reliable, universal Computer-Assisted Surgery (CAS) systems, we need algorithms that can exploit cross-domain knowledge to improve individual tasks. The objective of this challenge is to study the effect of using cross-domain knowledge for multi-task learning.

The objective of the task is to predict bounding boxes and class scores for both datasets. Participating teams will aim to improve performance by learning a single model from both datasets (domains). Joint mean Average Precision (Joint mAP) is the evaluation metric and will also be used to rank submissions.

This work received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 779813.
